RISC-V

The rise of collaborative open-source technology, such as RISC-V, has been one of the biggest technology changes in recent years. Using an industry-standard Instruction Set Architecture (ISA) based on established RISC principles, Axelera AI both contributes to and benefits from the significant investment in the RISC-V ecosystem. That ecosystem has already seen TensorFlow Lite ported to a RISC-V processor core for Edge AI applications such as sensor data evaluation, gesture control, and vibration analysis.

We have a strong RISC-V team with significant contributions.

More than 15 publications and counting

Our recent RISC-V publications

• Spatz: A Compact Vector Processing Unit for High-Performance and Energy-Efficient Shared-L1 Clusters

• MiniFloat-NN and ExSdotp: An ISA Extension and a Modular Open Hardware Unit for Low-Precision Training on RISC-V cores

• Banshee: A Fast LLVM-Based RISC-V Binary Translator

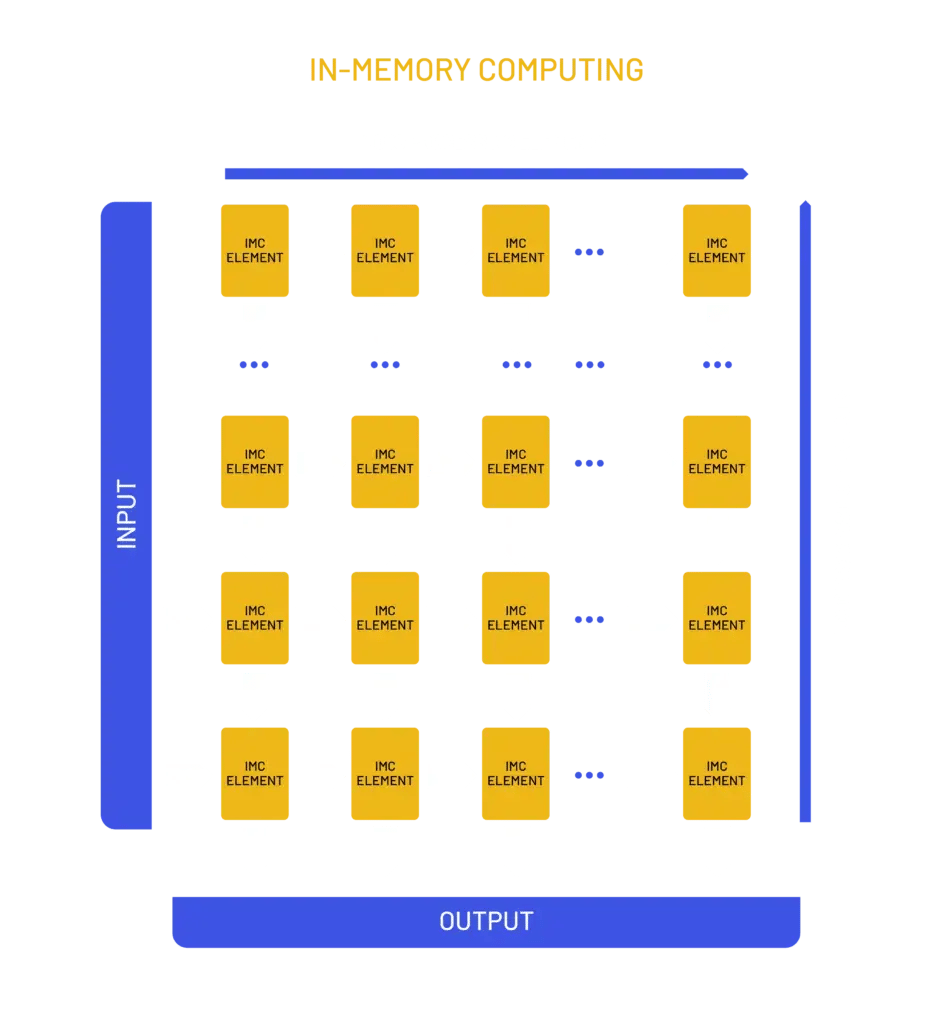

Standard CMOS processing

A major advantage of Axelera AI systems is that their accelerator technology is implemented in standard CMOS technology. Our SRAM-based D-IMC design uses proven, cost-effective, and easily accessible materials and manufacturing processes that are readily available to foundries. Memory technologies are also a key driver of smaller lithography nodes, so Axelera AI will be able to scale performance as the semiconductor industry brings advanced lithography nodes into volume production.

Factory-agnostic, simplifying supply chains

Neural Network optimization

One of the biggest challenges for Edge AI is optimizing neural networks to run efficiently when ported to a mixed-precision accelerator. Our technology includes proven quantization techniques and mapping tools that significantly reduce AI computational load and increase energy efficiency. A generic quantization flow ensures our systems can be applied to a wide range of networks while minimizing accuracy loss. In fact, compared to a ResNet-50 neural network model running in 32-bit floating point (FP32), Axelera AI’s post-training quantization technique achieves a relative accuracy of 99.9%.
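To make the idea of post-training quantization concrete, here is a minimal sketch in Python. It is not Axelera AI's quantization flow, which is not described here; it shows a generic symmetric, per-tensor INT8 scheme, and the function names (`quantize_int8`, `dequantize`, `relative_accuracy`) and the sample weights are hypothetical. It also assumes that "relative accuracy" means the quantized model's accuracy divided by the FP32 model's accuracy.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integer codes in [-127, 127].

    Illustrative scheme only; real flows often use per-channel scales
    and calibration data to choose the range.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


def relative_accuracy(quantized_acc, fp32_acc):
    """Assumed definition: quantized accuracy as a fraction of FP32 accuracy."""
    return quantized_acc / fp32_acc


# Hypothetical weights, chosen only to exercise the functions.
weights = [0.42, -1.27, 0.05, 0.9, -0.33]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# Rounding to the nearest code bounds the per-weight error by half a step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

Under the assumed definition, a relative accuracy of 99.9% means the quantized model keeps 99.9% of the FP32 model's accuracy, so `relative_accuracy(q, f)` would return roughly 0.999 for such a pair of measurements.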

99.9% relative accuracy

FP32 Equivalent Accuracy
